Decoding The Digital Jumble: Understanding Garbled Text Like "ë² ì³‡ 앨러"

Have you ever encountered strange, unreadable characters on your screen, a perplexing string of symbols that looks like an alien language, such as "ë² ì³‡ 앨러"? This digital jumble, often referred to as "garbled text" or "mojibake," is a surprisingly common phenomenon in our interconnected world. It's more than just an aesthetic annoyance; it's a fundamental issue rooted in how computers understand and display text, particularly across different languages and systems. Understanding why these cryptic sequences appear and, more importantly, how to resolve them, is crucial for anyone dealing with digital information, from casual web browsing to complex data analysis and web scraping. This article will delve into the fascinating world of character encoding, demystifying why text like "ë² ì³‡ 앨러" appears, and equip you with the knowledge to tackle these digital riddles.

In an era where information flows globally, text data often originates from diverse sources, created with different software, operating systems, and regional settings. When these pieces of text meet a system that doesn't interpret them correctly, the result is often a string of nonsensical characters. Far from being random, these garbled characters are usually a misinterpretation of perfectly valid data, a consequence of mismatched character encoding. Let's embark on a journey to unravel the mystery behind "ë² ì³‡ 앨러" and similar digital anomalies.

Table of Contents

- What Exactly is Garbled Text? The Case of "ë² ì³‡ 앨러"

- The Bedrock of Digital Text: Character Encoding

- Unicode Unveiled: Its Power and Scope

- Why Does Text Get Garbled? Common Causes of Encoding Errors

- Practical Solutions: Decoding the Digital Jumble

- Encoding and Web Scraping: A Critical Connection

- Preventing Future Encoding Headaches: Best Practices

- The Importance of E-E-A-T and YMYL in Data Handling

What Exactly is Garbled Text? The Case of "ë² ì³‡ 앨러"

Garbled text, often appearing as sequences like "ë² ì³‡ 앨러," is correctly encoded data that is being *misinterpreted* by the system trying to display it. Imagine speaking a sentence in English while the listener tries to parse it using the grammar rules of French. The sounds are the same, but the meaning is lost. In the digital realm, this happens when text is encoded in one character set (e.g., UTF-8) but decoded using another (e.g., ISO-8859-1, Windows-1252, or another legacy encoding). The string "ë² ì³‡ 앨러" is a good example, and only part of it is damaged. The tail, "앨러," is already readable Hangul. The head is UTF-8 that was decoded as Windows-1252: "ë²" plus the following space corresponds to the bytes EB B2 A0, which decode as UTF-8 to the syllable "베," and "ì³‡" corresponds to EC B3 87, which decodes to "쳇" (this assumes the space after "²" began as a non-breaking space, byte A0). What appears to be random characters is therefore legitimate Korean text that has fallen victim to an encoding mismatch. This type of error is common when dealing with content from languages that use non-Latin scripts, such as Korean, Japanese, Chinese, or Arabic. The core issue lies in the fundamental way computers store and process text.

The Bedrock of Digital Text: Character Encoding
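
To see the encode-in-one, decode-in-another mismatch from the garbled-text discussion above in action, here is a minimal Python sketch. It assumes the wrong decoder is Latin-1 (ISO-8859-1), chosen because it maps every byte to some character. The helper names `garble` and `repair` are illustrative and not part of any library.

```python
def garble(text: str, wrong_encoding: str = "latin-1") -> str:
    """Encode text as UTF-8, then decode those bytes with the wrong encoding."""
    return text.encode("utf-8").decode(wrong_encoding)


def repair(garbled: str, wrong_encoding: str = "latin-1") -> str:
    """Undo garble(): recover the original bytes, then decode them as UTF-8."""
    return garbled.encode(wrong_encoding).decode("utf-8")


original = "한글"            # two Hangul syllables, three UTF-8 bytes each
broken = garble(original)    # six Latin-1 characters, one per byte
print(repr(broken))
print(repair(broken))        # the original text comes back unchanged
```

The repair only works because no bytes were lost along the way. If a system replaced undecodable bytes with "?" before saving, the original text cannot be recovered this way.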

At its heart, a computer only understands numbers, specifically binary (0s and 1s). To represent human-readable text, each character (like 'A', 'b', '7', '!', or a Korean hangul character) must be assigned a unique numerical code. Character encoding is the system that maps these characters to their corresponding numerical values, and then to the bytes that computers store and transmit. Without a consistent encoding scheme, digital communication would be impossible.

ASCII: The Early Days of Text Representation
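
The character-to-number-to-bytes mapping described above can be inspected directly in Python. This is a small sketch using only built-ins; the helper name `describe` is hypothetical.

```python
def describe(ch: str):
    """Return the code point label and the UTF-8 bytes for one character."""
    return f"U+{ord(ch):04X}", ch.encode("utf-8")


for ch in ["A", "7", "베"]:
    label, utf8_bytes = describe(ch)
    print(ch, label, utf8_bytes.hex(" "))

# The same character maps to different bytes under a different encoding.
print("베".encode("euc-kr").hex(" "))
```

The code point is the same no matter which encoding is used; only the bytes differ, which is why the reader and the writer have to agree on the encoding.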

The earliest and most fundamental character encoding standard was ASCII (American Standard Code for Information Interchange). Developed in the 1960s, ASCII uses 7 bits to represent 128 characters, primarily English uppercase and lowercase letters, numbers, punctuation, and control characters. It was revolutionary for its time, allowing computers to exchange text reliably. However, ASCII's limitation quickly became apparent: it could only represent a tiny fraction of the world's languages. As computing became global, different regions developed their own "extended ASCII" versions, often using the 8th bit to add 128 more characters specific to their language (e.g., characters with diacritics for European languages, or Cyrillic characters). This proliferation of different 8-bit encodings (like ISO-8859-1 for Western Europe, or various Windows code pages) led to the very problem we see with "ë² ì³‡ 앨러." If a document was saved in one 8-bit encoding and opened with another, the characters would appear garbled because the numerical codes for specific characters differed across these regional standards.

The Rise of Unicode: A Universal Language
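
The 8-bit code page problem described above is easy to reproduce. The sketch below decodes a single byte with three regional encodings that ship with Python; the choice of byte and of code pages is only an illustration.

```python
raw = b"\xe9"  # one byte above the 7-bit ASCII range

# The same byte means a different character in each regional code page.
decodings = {enc: raw.decode(enc) for enc in ("latin-1", "cp1251", "iso8859-7")}

for enc, ch in decodings.items():
    print(f"{enc:10} -> {ch}")
```

Here the byte reads as a Latin "é" in ISO-8859-1, a Cyrillic "й" in Windows-1251, and a Greek "ι" in ISO-8859-7.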

The need for a single, universal character encoding system became paramount to overcome the "mojibake" nightmare. This led to the development of Unicode. Unicode aims to assign a unique number (called a "code point") to every character in every human language, including ancient scripts, symbols, and even emojis. It's not an encoding itself, but rather a vast character set. To store and transmit these Unicode code points, various encoding schemes were developed, the most prevalent being UTF-8 (Unicode Transformation Format - 8-bit). UTF-8 is a variable-width encoding, meaning characters can take up different numbers of bytes. ASCII characters (U+0000 to U+007F) are encoded using a single byte, making UTF-8 backward-compatible with ASCII. Characters from other languages require more bytes (3 bytes for each Korean Hangul syllable in UTF-8). This efficiency and universality have made UTF-8 the dominant encoding on the web today. When you see "ë² ì³‡ 앨러," it's usually because a system expected one encoding (e.g., Windows-1252 or a legacy Korean encoding like EUC-KR) but received UTF-8 bytes, or vice-versa, leading to misinterpretation.

Unicode Unveiled: Its Power and Scope
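
The variable-width behavior of UTF-8 described above can be checked with a few lines of Python. The sample characters are arbitrary picks from different ranges, and `utf8_length` is a hypothetical helper name.

```python
def utf8_length(ch: str) -> int:
    """Number of bytes UTF-8 uses for a single character."""
    return len(ch.encode("utf-8"))


for ch in ["A", "é", "베", "😀"]:
    print(f"U+{ord(ch):04X} takes {utf8_length(ch)} byte(s)")

# Pure ASCII text produces identical bytes in ASCII and UTF-8.
print("Hello".encode("ascii") == "Hello".encode("utf-8"))
```

The last line is the backward compatibility in practice: a file containing only ASCII characters is already valid UTF-8.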

Unicode is more than just a character set; it's a monumental achievement in digital standardization. It provides a consistent way to represent text from virtually every writing system in the world. This includes:

* **Basic Latin:** The familiar A-Z, a-z, 0-9.
* **Extended Latin, Greek, Cyrillic, Arabic, Hebrew, Devanagari, etc.:** Characters from major world languages.
* **CJK Unified Ideographs:** The Han characters shared by Chinese, Japanese, and Korean writing. Korean Hangul has its own blocks, such as Hangul Syllables.
* **Symbols:** Currency symbols (like €, ¥, £), mathematical symbols, scientific notation, musical notes, game pieces, and many other types of symbols.
* **Punctuation:** Standard and specialized punctuation marks.
* **Arrows:** A comprehensive set of arrow symbols.
* **Emojis:** The ubiquitous graphical symbols that have become an integral part of digital communication. Emoji live in specific Unicode blocks, allowing for consistent display across devices.

Unicode is organized into blocks, where characters of similar types or from specific scripts are grouped together. For instance, the Greek and Coptic block (U+0370–U+03FF) contains characters like alpha (α, U+03B1) and omega (ω, U+03C9), while the Latin letter alpha is a separate character (capital U+2C6D, small U+0251). Many accented characters and symbols also have equivalent HTML entities, such as `&eacute;` for é.

Exploring Unicode Blocks and Symbols

Unicode's structured approach, dividing characters into "blocks," makes it incredibly powerful for developers and linguists alike. Each block covers a range of code points dedicated to a specific script or category of symbols. For example:

* **Basic Latin (U+0000 to U+007F):** Contains the standard ASCII characters.
* **Arrows (U+2190 to U+21FF):** Dedicated to various arrow symbols.
* **Emoticons (U+1F600 to U+1F64F):** Where many popular emojis reside.
* **Currency Symbols (U+20A0 to U+20CF):** Contains symbols like the Euro sign (€), the Won sign (₩), and many others. Older currency signs such as the Yen sign (¥, U+00A5) sit in the Latin-1 Supplement block instead.

This systematic organization allows for efficient searching and manipulation of characters. A Unicode search tool gives a character-by-character breakdown: you can type in a single character, a word, or even paste an entire paragraph to get details about each character, including its code point, HTML entity, escape sequences, and its bytes in each UTF format. Many such tools also let you click a character to get its code. This is invaluable for debugging garbled text or ensuring correct character display, and it lets you quickly explore any character in a Unicode string.

Why Does Text Get Garbled? Common Causes of Encoding Errors
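
A character-by-character breakdown like the one such search tools provide can be approximated with Python's standard `unicodedata` module. This is a rough sketch, not a reimplementation of any particular tool; the function name `breakdown` and the chosen fields are assumptions.

```python
import unicodedata


def breakdown(text: str):
    """Return one dict per character with its code point, name, and bytes."""
    rows = []
    for ch in text:
        rows.append({
            "char": ch,
            "code_point": f"U+{ord(ch):04X}",
            "name": unicodedata.name(ch, "<unnamed>"),
            "utf8": ch.encode("utf-8").hex(" "),
            "html_entity": f"&#{ord(ch)};",  # numeric character reference
        })
    return rows


for row in breakdown("α€→"):
    print(row)
```

Running this on a garbled string is a quick way to see which code points are really present, which often reveals the encoding that was applied by mistake.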

The appearance of "ë² ì³‡ 앨러" or other gibberish is almost always a symptom of an encoding mismatch. Here are the most common scenarios:

1. **Incorrect Encoding Declaration:** A document (like an HTML page or a text file) is created and saved with a specific encoding (e.g., UTF-8), but the system trying to read it assumes a different encoding (e.g., ISO-8859-1 or a legacy Korean encoding like EUC-KR). If the HTML header or server response doesn't explicitly state the encoding, browsers or applications often guess, leading to errors.
2. **Saving with the Wrong Encoding:** A user might open a file, make changes, and then save it without realizing that the text editor has defaulted to a different encoding than the original. This often happens when moving files between different operating systems (e.g., Windows, macOS, Linux) or older software.
3. **Database Encoding Issues:** Text data stored in a database might have been inserted with one encoding but retrieved with another, or the database itself might not be configured to handle the full range of Unicode characters, leading to corruption or garbling upon retrieval.
4. **Data Transmission Problems:** When data is sent over a network (e.g., via email, API calls, or web forms), if the sending and receiving systems don't agree on the encoding, or if the data is corrupted during transmission, it can result in garbled text.
5. **Web Scraping Misconfiguration:** Web scraping is a frequent source of encoding issues. Websites might declare one encoding but actually use another, or the scraper might not correctly detect or apply the appropriate encoding when fetching and parsing content. A Korean news headline such as "검사장 출신 로펌대표 성추행 폭로" (Prosecutor-turned-law firm CEO accused of sexual harassment) only becomes readable once the scraper decodes the page with the right encoding before handing it to a parser like BeautifulSoup.

Practical Solutions: Decoding the Digital Jumble

Fortunately, once you understand the root cause, resolving garbled text like "ë² ì³‡ 앨러" becomes much more manageable.

1. **Browser Encoding Settings:** For web pages, most modern browsers automatically detect encoding. However, if you encounter garbled text, you can manually change the character encoding in your browser's settings. Look for options like "Text Encoding" or "Character Encoding" and try different common encodings like "UTF-8," "ISO-8859-1," or specific regional encodings (e.g., "EUC-KR" for Korean, "Shift-JIS" for Japanese).
2. **Text Editors and IDEs:** When working with text files, ensure your text editor or Integrated Development Environment (IDE) is configured to open and save files with the correct encoding, preferably UTF-8. Most advanced editors allow you to see and change the file's encoding.
3. **Programming for Encoding:** For developers, handling encoding is a fundamental skill.
   * **Python:** Python 3 handles Unicode natively, making encoding issues less frequent than in Python 2. When reading data, explicitly specify the encoding: `with open('file.txt', 'r', encoding='utf-8') as f:`. When writing, do the same: `with open('output.txt', 'w', encoding='utf-8') as f:`.
   * **Encoding and Decoding:** The `encode()` method converts a string (Unicode code points) into bytes using a specified encoding, while `decode()` converts bytes into a string (Unicode). For example, `b'your_bytes'.decode('utf-8')` or `'your_string'.encode('utf-8')`. This is critical when dealing with raw bytes, especially from network streams or files.
   * **Identifying Encoding:** Libraries like `chardet` (a universal character encoding detector) can help guess the encoding of unknown byte sequences. This is particularly useful when you receive data without an explicit encoding declaration.

Encoding and Web Scraping: A Critical Connection
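
When a detector such as `chardet` is not available, one rough stand-in is to try a short list of candidate encodings in order and keep the first one that decodes cleanly. The sketch below uses only the standard library. It is a heuristic and not how `chardet` works, and both the function name `decode_with_fallback` and the candidate list are assumptions chosen for illustration.

```python
def decode_with_fallback(raw: bytes, candidates=("utf-8", "euc-kr", "cp1252")):
    """Try each candidate encoding in order; return (text, encoding_used)."""
    for enc in candidates:
        try:
            return raw.decode(enc), enc
        except UnicodeDecodeError:
            continue
    # Latin-1 maps every byte to a character, so this last resort never fails,
    # although the result may still be mojibake.
    return raw.decode("latin-1"), "latin-1"


print(decode_with_fallback("한글".encode("utf-8")))
print(decode_with_fallback("한글".encode("euc-kr")))
print(decode_with_fallback(b"caf\xe9"))
```

Order matters: UTF-8 goes first because random legacy bytes rarely form valid UTF-8, whereas permissive 8-bit encodings accept almost anything. A clean decode is still only a guess, so an explicit encoding declaration should win whenever one exists.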

Web scraping is where encoding mistakes surface most often. When you crawl a website, you receive raw bytes. If these bytes are misinterpreted, you get "mojibake." Here's how it typically works in web scraping:

1. **Fetch HTML:** You send an HTTP request to a URL and receive the raw HTML content as bytes.
2. **Identify Encoding:** The server *should* send a `Content-Type` header that specifies the character set (e.g., `charset=UTF-8`). If it does, you use that. If not, you might look for a `<meta charset="...">` tag within the HTML itself.
3. **Decode Bytes:** Once you know the encoding, you `decode` the raw bytes into a Unicode string.

A Korean-language note about crawling a Hankyoreh article describes exactly this: "위 처럼 인코딩 방식을 바꿔준 다음 html을 받고, BeautifulSoup으로 원하는 정보를 찾아주면 검사장 출신 로펌대표 성추행 폭로 “가해자가 전화하며 2 차· 3 차 위협… 왜 긴시간 말할 수 없었는지 관심을” 이런 식으로 제대로 보이는 것을 볼 수 있습니다. 참고로 위 내용은 한겨레 기사 크롤링하다가."

This translates to: "After changing the encoding method as above, receiving the HTML, and then finding the desired information with BeautifulSoup, you can see it displayed correctly, like 'Prosecutor-turned-law firm CEO accused of sexual harassment: "The perpetrator threatened me a second and third time on the phone... Why couldn't I speak for so long? Pay attention to that."' For your information, the above content was obtained while crawling a Hankyoreh article."

This illustrates the process: you get the HTML (as bytes), change the encoding (decode the bytes with the correct charset), then use BeautifulSoup to parse the now-readable Unicode string. If the encoding is wrong, the Korean text appears as "ë² ì³‡ 앨러"-like gibberish. This process is vital for ensuring data integrity. Imagine scraping financial data or legal documents where garbled text could lead to misinterpretations, incorrect analysis, or even legal liabilities. Correct encoding is the first step towards accurate data extraction.

Preventing Future Encoding Headaches: Best Practices
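
The fetch, identify, and decode steps from the scraping discussion above can be sketched without any network access by working on bytes that are already in hand. Everything here is standard library. The function names, the 2048-byte search window, and the sample page are assumptions for illustration, and the simple regular expression is a stand-in for a real HTML parser.

```python
import re
from email.message import Message

META_RE = re.compile(rb'<meta[^>]+charset=["\']?([\w-]+)', re.IGNORECASE)


def charset_from_header(content_type: str):
    """Extract the charset parameter from a Content-Type header value."""
    msg = Message()
    msg["Content-Type"] = content_type
    return msg.get_content_charset()  # lowercased name, or None if absent


def charset_from_meta(raw_html: bytes):
    """Look for a <meta ... charset=...> declaration near the top of the page."""
    match = META_RE.search(raw_html[:2048])
    return match.group(1).decode("ascii").lower() if match else None


def decode_html(raw_html: bytes, content_type=None, default="utf-8") -> str:
    """Prefer the header, then the meta tag, then a default encoding."""
    charset = charset_from_header(content_type) if content_type else None
    if charset is None:
        charset = charset_from_meta(raw_html)
    try:
        return raw_html.decode(charset or default, errors="replace")
    except LookupError:  # the page declared an encoding Python does not know
        return raw_html.decode(default, errors="replace")


# A hypothetical page saved as EUC-KR whose server sent no charset parameter.
page = '<html><head><meta charset="euc-kr"></head><body>한글</body></html>'.encode("euc-kr")
print(decode_html(page, content_type="text/html"))
```

The returned string is ordinary Unicode text, so it can be passed on to an HTML parser such as BeautifulSoup without further encoding work.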

While "ë² ì³‡ 앨러" can be fixed, preventing it is always better.

1. **Standardize on UTF-8:** For all new projects, documents, databases, and web applications, standardize on UTF-8. It is the most widely supported and flexible encoding, capable of representing all Unicode characters.
2. **Declare Encoding Explicitly:** Always declare the encoding of your documents and data streams.
   * For HTML: `<meta charset="utf-8">` in the `<head>` section.
   * For HTTP responses: Set the `Content-Type` header (e.g., `Content-Type: text/html; charset=utf-8`).
   * For text files: Use a Byte Order Mark (BOM) for UTF-8 (though often not strictly necessary for

ì?¤ë²?ì??í ¼ - Decks - Marvel Snap Zone

Kỳ Tích Tình Yêu - The Infinite Love | FPT Play

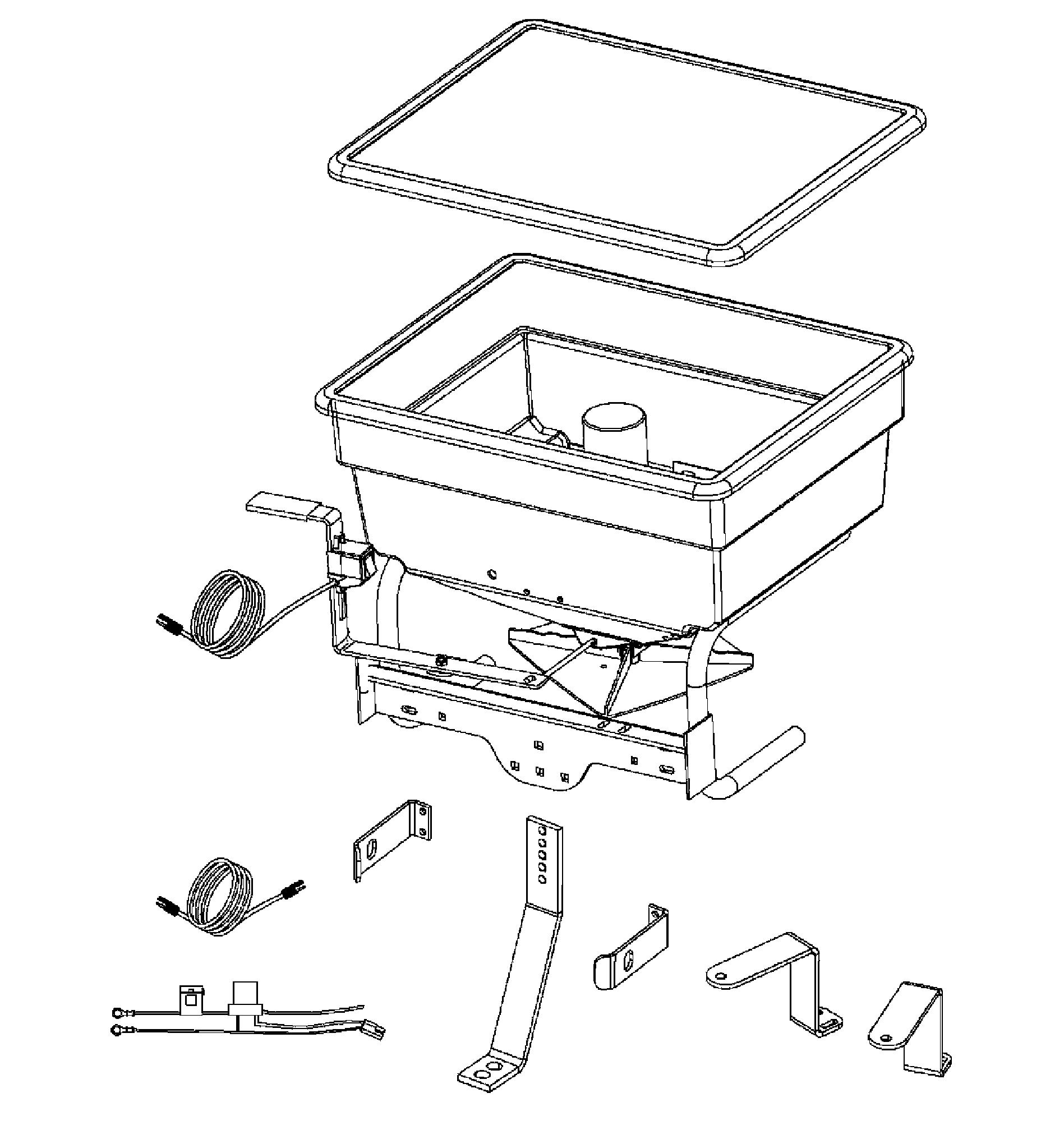

John Deere LP3301 Mounted Spreader Operator’s Manual Instant Download